Master Data Management (MDM) and Reference Data Management (RDM)

If you want to step into the data domain, the most important topics to understand are Metadata Management and Master & Reference Data Management. Once you grasp these areas, you will need up to 80% less time to orchestrate any data solution.

Let's decode it.

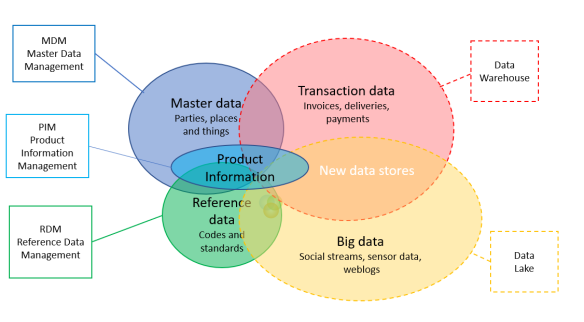

In general terms, Master Data is the core data about an entity without which other datasets cannot be created. Take a customer as an example of Master Data. A customer uses the services of a bank: he has a bank account, a debit card, a credit card, and may have a running loan. In a bank, all this information lives in different systems: bank account details in one system, debit and credit card details in another, and loans in a third. If each of these systems creates and keeps its own customer details (name, date of birth, ID, address, etc.), there is a high chance that the same customer is created multiple times across the systems with different identifiers. When decision makers then want to see the number of customers across the organization, they will see the same person counted as multiple customers. MDM solves this problem by giving each customer one unique identifier across all systems within the organization. More broadly, MDM provides that single identifier for employees, customers, products, locations, things, etc.

Image: https://mdmlist.com/2019/10/10/master-data-product-information-reference-data-and-other-data/
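The customer example above boils down to one idea: every local record, whichever system holds it, points to the same organization-wide identifier. The sketch below illustrates that idea under stated assumptions. The system names, local IDs, the `CUST-` numbering scheme, and the match rule (same normalized name plus date of birth) are all hypothetical; real MDM tools use far richer matching and survivorship rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    system: str    # hypothetical source system name, e.g. "accounts"
    local_id: str  # identifier used only inside that system
    name: str
    dob: str       # date of birth as an ISO string, e.g. "1985-04-12"


def match_key(rec: SourceRecord) -> tuple[str, str]:
    # Assumed, deliberately naive match rule: same normalized name and date of birth.
    return (" ".join(rec.name.lower().split()), rec.dob)


def assign_master_ids(records: list[SourceRecord]) -> dict[tuple[str, str], str]:
    """Map each (system, local_id) pair to one organization-wide master ID."""
    master_by_key: dict[tuple[str, str], str] = {}
    xref: dict[tuple[str, str], str] = {}
    for rec in records:
        key = match_key(rec)
        if key not in master_by_key:
            # First time this person is seen: mint a new master ID.
            master_by_key[key] = f"CUST-{len(master_by_key) + 1:06d}"
        xref[(rec.system, rec.local_id)] = master_by_key[key]
    return xref


records = [
    SourceRecord("accounts", "A-17", "Jane  Doe", "1985-04-12"),
    SourceRecord("cards", "C-903", "jane doe", "1985-04-12"),
    SourceRecord("loans", "L-55", "John Smith", "1979-11-02"),
]
xref = assign_master_ids(records)
print(len(set(xref.values())))  # 2 distinct customers instead of 3
```

The cross-reference (`xref`) is what lets a customer count across the bank return two people rather than three local records.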

Any discussion of Master Data must also cover Reference Data. Reference Data is closely related to Master Data but is smaller in volume and expected to change less often. Returning to the same example, the customer uses a Debit Card service: to the customer it is simply a Debit Card, but the systems may store a code for it, e.g., DbCrd. RDM manages such lists, cross-reference lists, classifications, codes, and descriptions for entities.
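To make the Debit Card example concrete, here is a minimal sketch of a reference list plus a cross-reference list. Only the code `DbCrd` comes from the text above; the canonical codes, the other local codes, and the system names are hypothetical placeholders.

```python
# Canonical reference list (hypothetical codes and descriptions).
PRODUCT_TYPES = {
    "DEBIT_CARD": "Debit Card",
    "CREDIT_CARD": "Credit Card",
    "LOAN": "Loan",
}

# Cross-reference list: (source system, local code) -> canonical code.
CROSSWALK = {
    ("cards", "DbCrd"): "DEBIT_CARD",
    ("cards", "CrCrd"): "CREDIT_CARD",
    ("core_banking", "DC"): "DEBIT_CARD",
    ("lending", "LN"): "LOAN",
}


def standardize(system: str, local_code: str) -> tuple[str, str]:
    """Translate a system-specific code into the canonical code and description."""
    try:
        canonical = CROSSWALK[(system, local_code)]
    except KeyError:
        # Unmapped codes are surfaced instead of silently passed through.
        raise ValueError(f"Unmapped code {local_code!r} from system {system!r}") from None
    return canonical, PRODUCT_TYPES[canonical]


print(standardize("cards", "DbCrd"))      # ('DEBIT_CARD', 'Debit Card')
print(standardize("core_banking", "DC"))  # ('DEBIT_CARD', 'Debit Card')
```

Two systems use different local codes, yet both resolve to the same standardized value and definition, which is exactly the consistency RDM is meant to provide.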

If your Master and Reference Data is not in place, your Metadata will grow enormous and cannot be streamlined. If your Metadata is not in place, you can’t trace Data Lineage. If your Data Lineage is not in place, your data will turn into a Data Swamp. If you have a Data Swamp, you will need 80% more processing power for Data Analytics. More processing power means more infrastructure. More infrastructure means more CAPEX and OPEX, a higher TCO, and a longer ROI. A longer ROI means the business is not happy, and if the business is not happy, you are expendable ☺.

Can Metadata, Master & Reference Data Management reduce your BI footprint by 80%? Yes, it can. For example, if you build a dashboard or report and describe its Metadata so that it tells the entire story around the data points and KPIs, the next BI report will be much easier to develop. With proper Master and Reference Data Management in place, that Metadata will not be duplicated.

Example #1

For a better understanding, consider the banking sector again. A bank usually has multiple customer onboarding channels, e.g., customers can be onboarded at a branch, through the customer service center, etc. Each channel's system has its own attributes to tag new customers. The traditional challenge is that when the same person goes to another channel of the same bank, that system doesn't recognize him/her and ends up creating a new customer. Now the same person exists as two customers in the same bank. This challenge can be mitigated by a Master Data Management solution in which all channels search one central system for an existing customer before creating a new one.
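The "search before create" behavior described in Example #1 can be sketched as a single hub that every channel calls. This is an illustration, not a product API: `CustomerHub`, `find_or_create`, and the match rule (national ID plus date of birth) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerHub:
    """Hypothetical central MDM hub that every onboarding channel calls."""
    by_key: dict[tuple[str, str], str] = field(default_factory=dict)
    next_seq: int = 1

    def _key(self, national_id: str, dob: str) -> tuple[str, str]:
        # Assumed match rule: normalized national ID plus date of birth identifies a person.
        return (national_id.strip().upper(), dob)

    def find_or_create(self, national_id: str, dob: str) -> tuple[str, bool]:
        """Return (master_id, created). Search first; create only when nothing matches."""
        key = self._key(national_id, dob)
        if key in self.by_key:
            return self.by_key[key], False
        master_id = f"CUST-{self.next_seq:06d}"
        self.next_seq += 1
        self.by_key[key] = master_id
        return master_id, True


hub = CustomerHub()
branch_result = hub.find_or_create("ab123456", "1990-01-31")         # onboarded at a branch
call_centre_result = hub.find_or_create("AB123456 ", "1990-01-31")   # same person, another channel
print(branch_result, call_centre_result)
# ('CUST-000001', True) ('CUST-000001', False)
```

The second channel receives the existing master ID instead of creating a duplicate customer.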

Example #2

Multiple companies produce the same kind of product, e.g., glass. Each company has its own unique code for glass in its systems. If those companies merge, there will be multiple codes for the same entity, i.e., glass. It is then the job of a Master Data Management solution to bring all the codes for glass into one place and generate a SINGLE CODE for glass going forward. Metadata and Data Lineage then no longer have to chase around for the right code for glass.
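A minimal sketch of the consolidation in Example #2, assuming that legacy codes can be grouped by a normalized product name. All company names, legacy codes, and the `PRD-` format are hypothetical, and a real solution would match on many more product attributes than the name.

```python
from collections import defaultdict

# Hypothetical legacy codes for the same product from three merged companies.
legacy_codes = [
    ("CompanyA", "GLS-01", "glass"),
    ("CompanyB", "G100", "Glass"),
    ("CompanyC", "GLASS_STD", "GLASS "),
]


def consolidate(codes: list[tuple[str, str, str]]) -> tuple[dict[str, str], dict[tuple[str, str], str]]:
    """Group legacy codes by normalized name and mint one master code per group."""
    groups: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for company, code, name in codes:
        groups[name.strip().lower()].append((company, code))

    master: dict[str, str] = {}                    # master code -> product name
    crosswalk: dict[tuple[str, str], str] = {}     # (company, legacy code) -> master code
    for i, (name, members) in enumerate(sorted(groups.items()), start=1):
        master_code = f"PRD-{i:04d}"
        master[master_code] = name
        for company, code in members:
            crosswalk[(company, code)] = master_code
    return master, crosswalk


master, crosswalk = consolidate(legacy_codes)
print(master)                            # {'PRD-0001': 'glass'}
print(crosswalk[("CompanyB", "G100")])   # PRD-0001
```

Keeping the crosswalk alongside the new single code lets old transactions still be traced to it, which is what spares Metadata and Data Lineage the search.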

Having Master and Reference Data Management is the first step towards having a ‘Single Source of Truth’ in your organization.

Focus of MDM and RDM

· MDM focuses on Master Data consistency within and across systems.

· RDM focuses on standardization of values and definitions within and across systems.

There are many MDM and RDM tools on the market, and the right one depends on the nature of the business and its requirements. The two most commonly used types of MDM are:

· Operational MDM focuses on managing and governing master data in source systems, especially the product and customer segments.

· Analytical MDM focuses on managing and governing data in downstream applications such as BI tools.

Domains

· Multidomain MDM maintains functions across domains. For example, data quality is an extremely broad function, so it is ideal to have MDM spread across all of the organization’s domains.

· Single Domain MDM maintains functions within one domain, because some domains are very specific to the nature of the business.

Data Sharing Architecture

· Consolidated Style primarily supports Business Intelligence (BI) and Data Warehousing (DWH): master data is consolidated from the operational systems where it is originally created, and this style falls under multidomain MDM.

· Registry Style acts as an index to master data whose authority remains distributed across the source systems. Its emphasis is on real-time, application-to-application access to remote data.

· Coexistence Style also keeps master data authority distributed, but a golden copy is maintained in a centralized hub that sends the golden copy to subscribing systems.

· Centralized Style is where master data is authored, stored, and accessed from one or more MDM hubs, in either a workflow or a transactional use case.
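The difference between the Registry and Coexistence styles can be sketched in a few lines. Everything here is hypothetical (`RegistryHub`, `CoexistenceHub`, the in-memory `SOURCES`, the attribute names): a registry hub stores only pointers and reads attributes from the sources at request time, while a coexistence hub stores a golden copy and pushes it to subscribers. The "later source wins" merge in the registry view is an assumed, simplified survivorship rule.

```python
from typing import Callable

# Hypothetical source systems: each keeps its own copy of customer attributes.
SOURCES: dict[str, dict[str, dict[str, str]]] = {
    "accounts": {"A-17": {"name": "Jane Doe", "address": "1 High St"}},
    "cards": {"C-903": {"name": "Jane Doe", "phone": "555-0100"}},
}


class RegistryHub:
    """Registry style: the hub holds only an index (master ID -> source keys)."""

    def __init__(self) -> None:
        self.index: dict[str, list[tuple[str, str]]] = {}

    def register(self, master_id: str, system: str, local_id: str) -> None:
        self.index.setdefault(master_id, []).append((system, local_id))

    def view(self, master_id: str) -> dict[str, str]:
        # Attributes are read from the sources on demand; later sources win on conflicts.
        merged: dict[str, str] = {}
        for system, local_id in self.index.get(master_id, []):
            merged.update(SOURCES[system][local_id])
        return merged


class CoexistenceHub:
    """Coexistence style: the hub keeps a golden copy and publishes it to subscribers."""

    def __init__(self) -> None:
        self.golden: dict[str, dict[str, str]] = {}
        self.subscribers: list[Callable[[str, dict[str, str]], None]] = []

    def subscribe(self, callback: Callable[[str, dict[str, str]], None]) -> None:
        self.subscribers.append(callback)

    def upsert(self, master_id: str, attrs: dict[str, str]) -> None:
        record = {**self.golden.get(master_id, {}), **attrs}
        self.golden[master_id] = record
        for notify in self.subscribers:
            notify(master_id, dict(record))  # each subscriber receives its own copy


registry = RegistryHub()
registry.register("CUST-000001", "accounts", "A-17")
registry.register("CUST-000001", "cards", "C-903")
print(registry.view("CUST-000001"))
# {'name': 'Jane Doe', 'address': '1 High St', 'phone': '555-0100'}

received: dict[str, dict[str, str]] = {}
hub = CoexistenceHub()
hub.subscribe(lambda mid, rec: received.update({mid: rec}))
hub.upsert("CUST-000001", {"name": "Jane Doe", "phone": "555-0100"})
print(received["CUST-000001"]["phone"])  # 555-0100
```

A Centralized hub would go one step further and become the only place where these attributes are authored.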

Just as a traditional data model or metadata model is created for Metadata, logical or canonical data models are created for Master & Reference Data Management. Another very important factor is Data Sharing Agreements, which define formal standards and access rights for internal as well as external parties.
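A canonical data model is the one agreed shape that every system maps into. The sketch below assumes two hypothetical source schemas ("accounts" and "cards") with different field names and date formats, and uses ISO 3166-1 alpha-2 country codes as an assumed reference standard. The class and function names are illustrative only.

```python
from dataclasses import dataclass
from datetime import date

# Assumed reference data: country names mapped to ISO 3166-1 alpha-2 codes.
COUNTRY_CODES = {"india": "IN", "united kingdom": "GB"}


@dataclass(frozen=True)
class CanonicalCustomer:
    """Hypothetical canonical model shared by all systems exchanging customer master data."""
    full_name: str
    birth_date: date
    country_code: str  # ISO 3166-1 alpha-2


def from_accounts(row: dict[str, str]) -> CanonicalCustomer:
    # Hypothetical 'accounts' schema: cust_name, dob as "YYYY-MM-DD", country as alpha-2.
    return CanonicalCustomer(
        full_name=row["cust_name"].strip(),
        birth_date=date.fromisoformat(row["dob"]),
        country_code=row["country"].upper(),
    )


def from_cards(row: dict[str, str]) -> CanonicalCustomer:
    # Hypothetical 'cards' schema: split names, "DD/MM/YYYY" dates, full country names.
    day, month, year = (int(part) for part in row["BirthDate"].split("/"))
    return CanonicalCustomer(
        full_name=f"{row['FirstName'].strip()} {row['LastName'].strip()}",
        birth_date=date(year, month, day),
        country_code=COUNTRY_CODES[row["Country"].strip().lower()],
    )


a = from_accounts({"cust_name": "Jane Doe ", "dob": "1985-04-12", "country": "gb"})
b = from_cards({"FirstName": "Jane", "LastName": "Doe", "BirthDate": "12/04/1985", "Country": "United Kingdom"})
print(a == b)  # True
```

Once both systems speak the canonical model, the matching and sharing steps no longer need to know each source's quirks.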

Cheers.
