Kafka Connect vs. Consumer/Producer

Learning Kafka can be challenging. While Kafka's foundational elements have stayed largely the same, the frameworks around them evolve rapidly.

A few years ago, Kafka was simpler. It mainly had Producers and Consumers. But now, we have things like Kafka Connect and Kafka Streams. The big question is: do these new components replace or work with the Consumer and Producer?

Let's unravel the complexity!

Kafka Producer

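Functionality: The Kafka Producer is responsible for publishing (producing) records to Kafka topics. For each record it picks a partition of the target topic (typically by hashing the record key) and sends the record to the broker that leads that partition, which appends it and replicates it to the other brokers holding copies of that partition.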

Use Cases: Producers are typically used in scenarios where data needs to be ingested into Kafka topics from various sources. For example, log files, sensor data, or events generated by applications can be sent to Kafka topics using producers.
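To make the partitioning step concrete, here is a toy, in-memory model; it is not the Kafka client API. `ToyTopic` and `ToyProducer` are hypothetical names, `zlib.crc32` stands in for the murmur2 hash that the Java client's default partitioner applies to keyed records, and unkeyed records are simply rotated across partitions, which simplifies what real clients do.

```python
import zlib


class ToyTopic:
    """Hypothetical in-memory stand-in for a topic: a fixed set of append-only partitions."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]


class ToyProducer:
    """Hypothetical simplified producer: choose a partition, then append the record."""

    def __init__(self):
        self._next = 0  # rotation counter for records without a key

    def _choose_partition(self, topic: ToyTopic, key) -> int:
        n = len(topic.partitions)
        if key is None:
            # Simplification: rotate unkeyed records across partitions.
            p = self._next % n
            self._next += 1
            return p
        # Keyed record (bytes): same key -> same partition.
        # crc32 is only a stand-in for Kafka's murmur2-based partitioner.
        return zlib.crc32(key) % n

    def send(self, topic: ToyTopic, key, value):
        """Append the record and return (partition, offset), like a send's metadata."""
        p = self._choose_partition(topic, key)
        log = topic.partitions[p]
        log.append((key, value))
        return p, len(log) - 1
```

The property worth noticing is that records with the same key always land in the same partition, so their relative order is preserved for consumers.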

Advantages:

Extremely simple to use for applications that emit streams of data, such as logs or IoT sensor readings.

Limitations:

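Anything beyond sending records, such as reading from an external system or tracking what has already been sent, is extra logic you must write and maintain yourself.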

Kafka Consumer

Functionality: The Kafka Consumer reads (consumes) records from Kafka topics. It subscribes to one or more topics, repeatedly polls the brokers for new records, and processes them in near real time as they become available.

Use Cases: Consumers are commonly employed to retrieve and process data from Kafka topics. Applications or systems that need to react to real-time events (e.g. sending emails) or perform analytics are typical Kafka consumers.
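The poll-and-commit cycle can be sketched with another toy model, again hypothetical and in-memory rather than the real client: `poll()` returns records past the consumer's current position, and `commit()` stores the offset of the next record to read, which is where a restarted consumer in the same group would resume.

```python
class ToyConsumer:
    """Hypothetical toy consumer over in-memory partitions (not the Kafka client API)."""

    def __init__(self, partitions):
        # partitions: dict of partition id -> list of (key, value) records
        self.partitions = partitions
        self.position = {p: 0 for p in partitions}   # next offset to read
        self.committed = {p: 0 for p in partitions}  # where a restart would resume

    def poll(self, max_records=10):
        """Return up to max_records new records as (partition, offset, record)."""
        batch = []
        for p, log in self.partitions.items():
            while self.position[p] < len(log) and len(batch) < max_records:
                batch.append((p, self.position[p], log[self.position[p]]))
                self.position[p] += 1
        return batch

    def commit(self):
        # Kafka convention: the committed offset is the next offset to consume.
        self.committed = dict(self.position)
```

Committing only after the records are processed gives at-least-once behavior: a crash between processing and committing means some records are read again.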

Advantages:

Simple to use, and scales out through consumer groups: the partitions of a topic are divided among the group's members so they consume in parallel.

Well-suited for stateless workloads like notifications.
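How a group splits the work can be illustrated with a simplified version of range-style assignment for a single topic: sort the members, give each `len(partitions) // len(members)` partitions, and hand one extra partition to the first few. In Kafka this happens during a group rebalance, and the strategy is configurable through the consumer's `partition.assignment.strategy` setting; `range_assign` is a hypothetical helper, not a Kafka function.

```python
def range_assign(partitions: list[int], members: list[str]) -> dict[str, list[int]]:
    """Hypothetical sketch of range-style assignment of one topic's partitions."""
    if not members:
        return {}
    members = sorted(members)
    partitions = sorted(partitions)
    per_member, extra = divmod(len(partitions), len(members))
    assignment = {}
    start = 0
    for i, member in enumerate(members):
        # The first `extra` members each take one additional partition.
        count = per_member + (1 if i < extra else 0)
        assignment[member] = partitions[start:start + count]
        start += count
    return assignment
```

With more consumers than partitions, the surplus members receive nothing and sit idle, which is why a topic's partition count caps a group's parallelism.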

Limitations:

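Less suited to ETL into external systems: the batching, retries, and offset commits around each write are logic you would have to build yourself.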

Kafka Connect

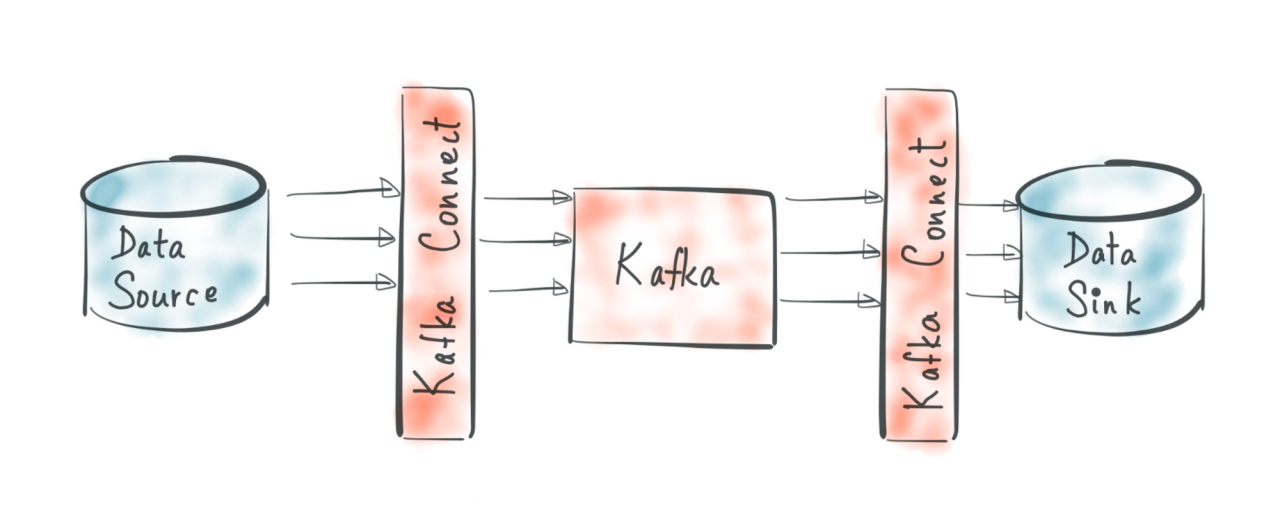

Functionality: Kafka Connect is a framework for building and running connectors that move data between Kafka and other systems. Connectors facilitate the integration of Kafka with various data sources and sinks.

Use Cases: Kafka Connect is used for building scalable, fault-tolerant data pipelines between Kafka and other systems. It simplifies importing data into and exporting data from Kafka by letting you reuse pre-built connectors for popular systems like databases, Hadoop, Elasticsearch, etc.
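Connectors are usually not written into application code at all; they are created by submitting JSON configuration to a Connect worker's REST API (`POST /connectors` with a `name` and a `config` object). The sketch below only builds that request with Python's standard library. The worker address `http://localhost:8083` (Connect's default REST port) and the helper name `connector_request` are assumptions.

```python
import json
import urllib.request

# Assumption: a Connect worker listening on its default REST port.
CONNECT_URL = "http://localhost:8083"


def connector_request(name: str, config: dict) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a request that creates a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"{CONNECT_URL}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is one more call, `urllib.request.urlopen(req)`, against a running worker; the worker then schedules the connector's tasks itself.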

Kafka Connect Source

Advantages:

Built on top of the Producer API: Connect uses producers to write the records that source connectors emit into Kafka.

A wide variety of existing connectors lets you bring in data from many sources with configuration alone, without coding.
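As an example of configuration instead of code, the `FileStreamSourceConnector` that ships with Apache Kafka as a demo turns each line of a file into a record. The helper below is hypothetical, and depending on the Kafka version the file connectors may need to be added to the worker's `plugin.path` before they can be used.

```python
def file_source_config(path: str, topic: str) -> dict:
    """Hypothetical helper: config for the demo FileStreamSourceConnector."""
    return {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",  # Connect config values are passed as strings
        "file": path,      # each line of this file becomes one record
        "topic": topic,    # source connectors write to this topic
    }
```

The resulting dict is what goes in the `config` object when the connector is created through Connect's REST API.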

Limitations:

Writing a custom source connector may be necessary for proprietary systems.
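Real custom connectors are Java classes built on Connect's `SourceConnector` and `SourceTask` API, where `SourceTask.poll()` returns a list of `SourceRecord`s that carry a source partition and a source offset alongside the data. The Python toy below only mirrors that contract to show what a custom connector has to track; `ToySourceRecord` and `ToyTableSourceTask` are hypothetical, and the "table" is an in-memory list.

```python
from dataclasses import dataclass


@dataclass
class ToySourceRecord:
    source_partition: dict  # which part of the external system, e.g. a table
    source_offset: dict     # how far into it this record is
    topic: str
    value: object


class ToyTableSourceTask:
    """Hypothetical task that emits rows with increasing ids from an in-memory 'table'."""

    def __init__(self, table_name, rows, topic, last_offset=None):
        self.table_name = table_name
        self.rows = rows  # list of dicts with an "id" key, sorted by id
        self.topic = topic
        # On restart, Connect would hand back the last stored source offset.
        self.last_id = (last_offset or {}).get("id", 0)

    def poll(self):
        """Return records for rows not emitted yet."""
        records = []
        for row in self.rows:
            if row["id"] > self.last_id:
                records.append(ToySourceRecord(
                    {"table": self.table_name}, {"id": row["id"]}, self.topic, row))
                self.last_id = row["id"]
        return records
```

Because Connect persists the source offsets, a restarted task can resume after the last row it emitted instead of re-reading everything.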

Kafka Connect Sink

Advantages:

Built on top of the Consumer API: Connect uses consumers to read the records it hands to sink connectors.

Existing sink connectors enable streaming ETL into target systems without coding.
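On the sink side, ETL without coding typically means a connector config plus optional single message transforms (SMTs). The sketch writes topics to a file with the demo `FileStreamSinkConnector` and uses the built-in `InsertField` transform to add a static field to each record value. It assumes the topics hold schemaless JSON objects, hence the `JsonConverter` settings; the helper name, transform alias, topics, and field values are hypothetical.

```python
def file_sink_config(topics: list[str], path: str) -> dict:
    """Hypothetical helper: sink config with one single message transform."""
    return {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSinkConnector",
        "tasks.max": "1",
        # Sink connectors read from a comma-separated "topics" list.
        "topics": ",".join(topics),
        "file": path,
        # Assumption: record values are schemaless JSON objects.
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
        "value.converter.schemas.enable": "false",
        # Built-in SMT: insert a static field into every record value.
        "transforms": "tagSource",
        "transforms.tagSource.type": "org.apache.kafka.connect.transforms.InsertField$Value",
        "transforms.tagSource.static.field": "pipeline",
        "transforms.tagSource.static.value": "orders-export",
    }
```

Swapping the file connector for a database or search-engine sink changes the `connector.class` and its connection settings, while the transform configuration stays the same.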

Limitations:

Writing a custom sink connector might be necessary if no connector is available for the target data sink.
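A custom sink connector follows the mirror-image contract: in Java, Connect calls `SinkTask.put()` with batches of consumed records and calls `flush()` before it commits the consumer offsets. This toy version, with hypothetical names and a dict standing in for the target database, shows why such writes are often made idempotent: upserting by key means records replayed after a failure do not create duplicates.

```python
class ToyDatabaseSinkTask:
    """Hypothetical sink task: buffer records in put(), write them in flush()."""

    def __init__(self, table):
        self.table = table  # dict of key -> value standing in for a database table
        self.buffer = []

    def put(self, records):
        # records: iterable of (topic, partition, offset, key, value)
        self.buffer.extend(records)

    def flush(self):
        """Upsert buffered records by key and return how many were written."""
        for _topic, _partition, _offset, key, value in self.buffer:
            self.table[key] = value
        written = len(self.buffer)
        self.buffer.clear()
        return written
```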

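There is some overlap: a plain consumer can load data into a database, and a source connector can replace a hand-written producer. In general, though, use producers and consumers for application logic, and Kafka Connect when the job is moving data between Kafka and another system.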

In summary

This article aims to assist you in understanding which Kafka API is suitable for your use case and why.

Producers write data to Kafka topics.

Consumers read data from Kafka topics.

Kafka Connect facilitates the integration of Kafka with external systems by providing a framework for building connectors.

Together, these components form a powerful and scalable architecture for handling real-time data streaming and processing within an organization.